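```python
import statsmodels.api as sm

# Fetch the Duncan occupational-prestige data from the R "carData" package
# (get_rdataset downloads it from the Rdatasets repository, so it needs network access).
dataset = sm.datasets.get_rdataset("Duncan", "carData")

# The pandas DataFrame itself is stored in the .data attribute.
df = dataset.data
```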

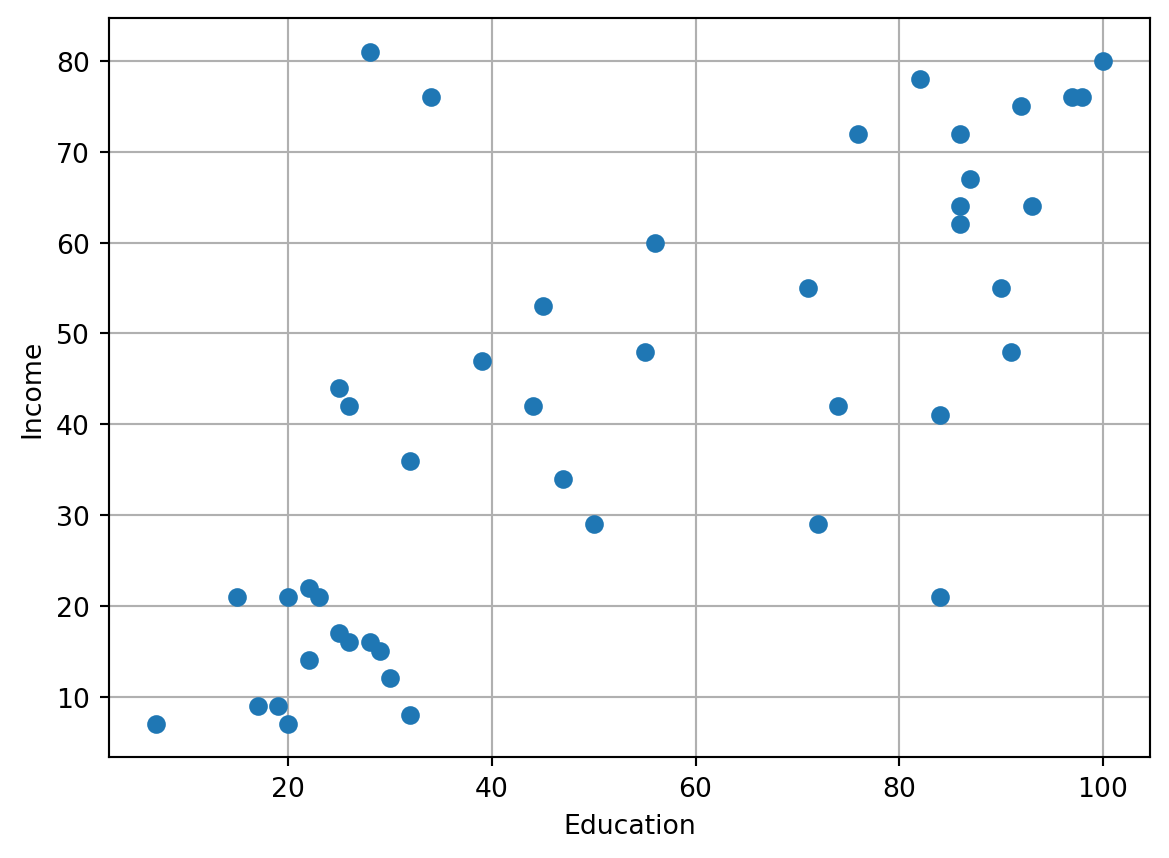

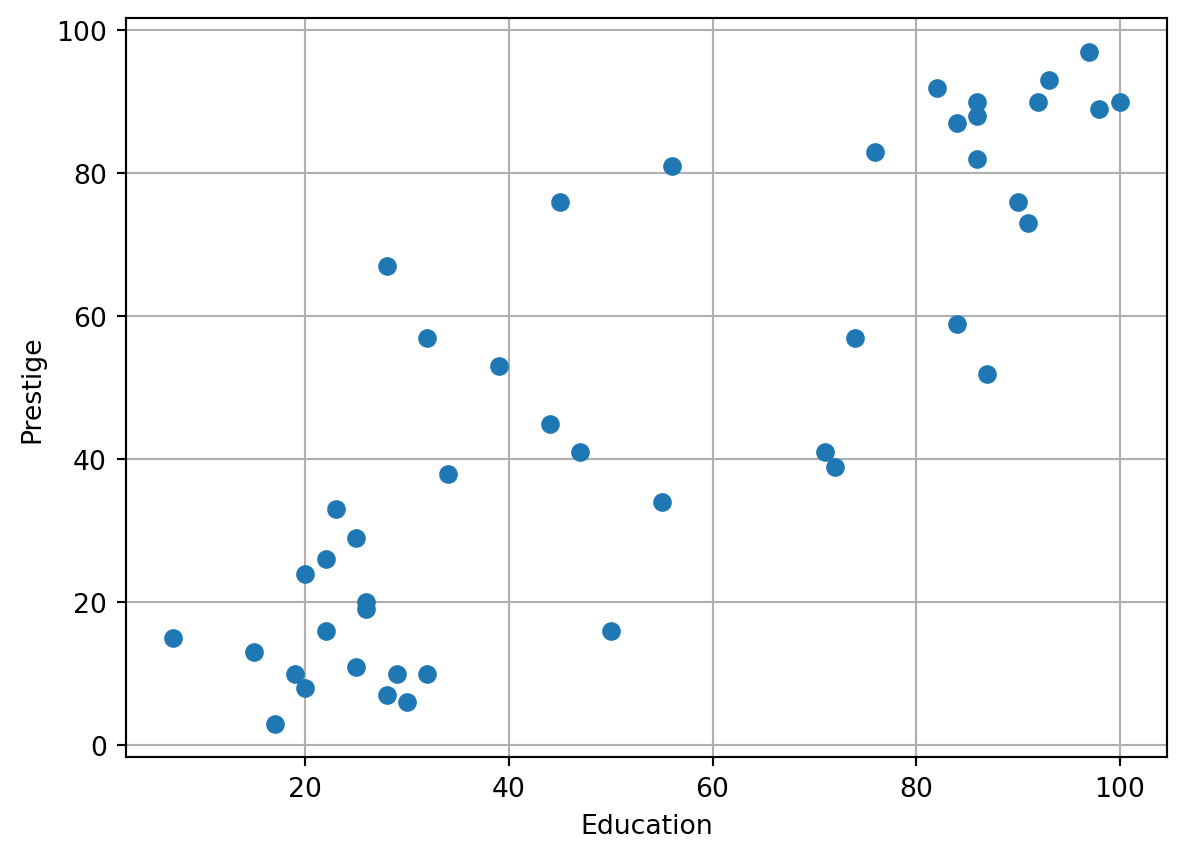

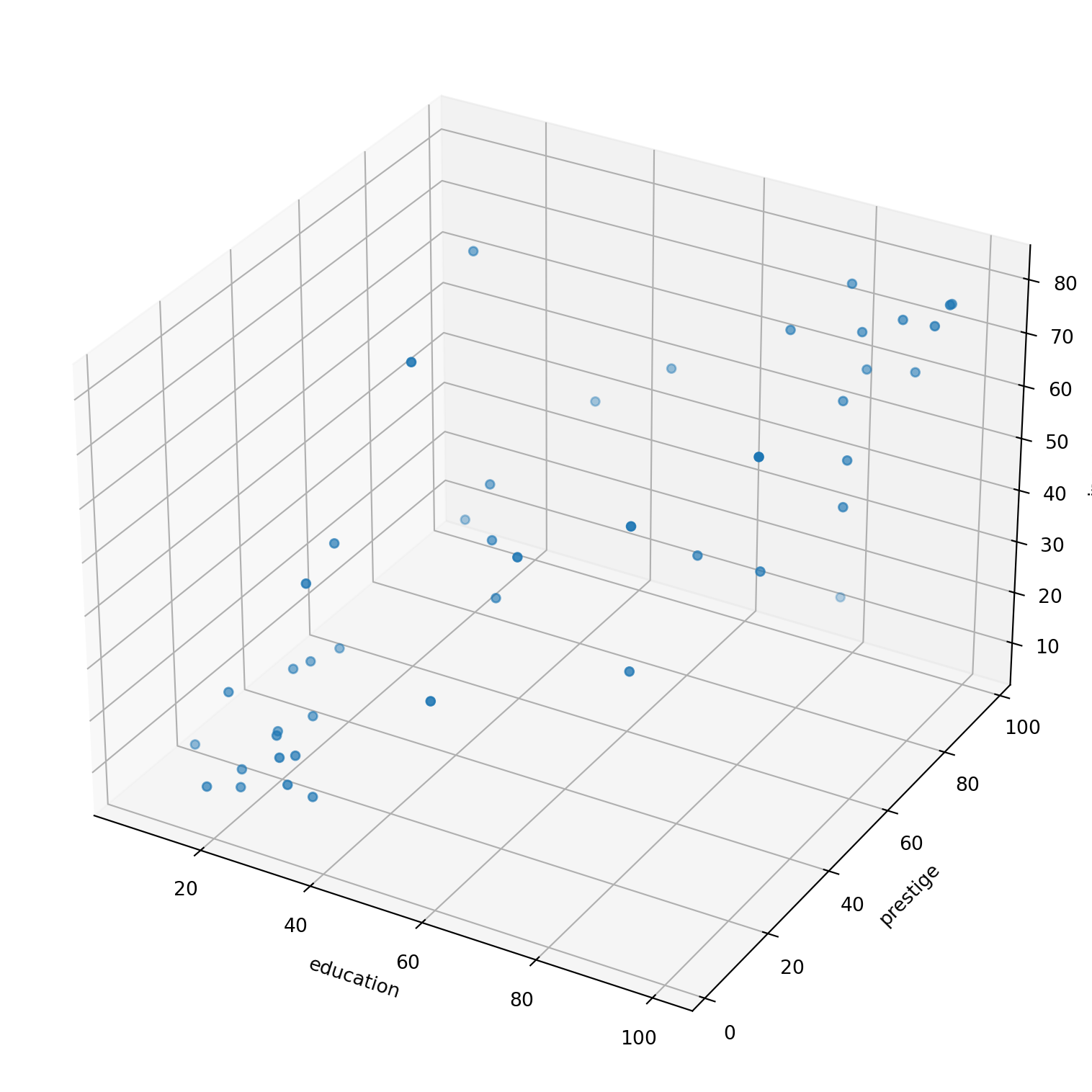

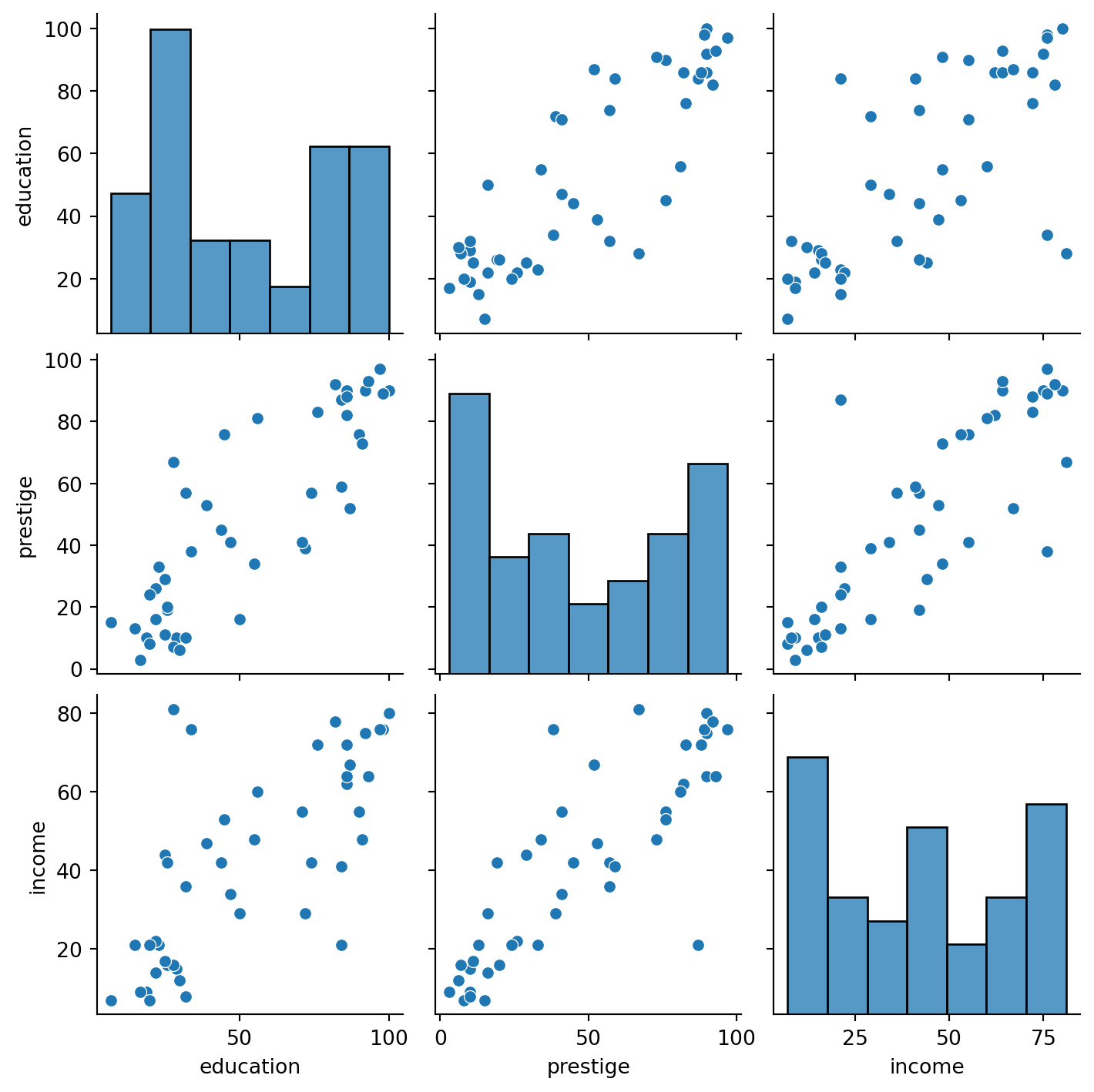

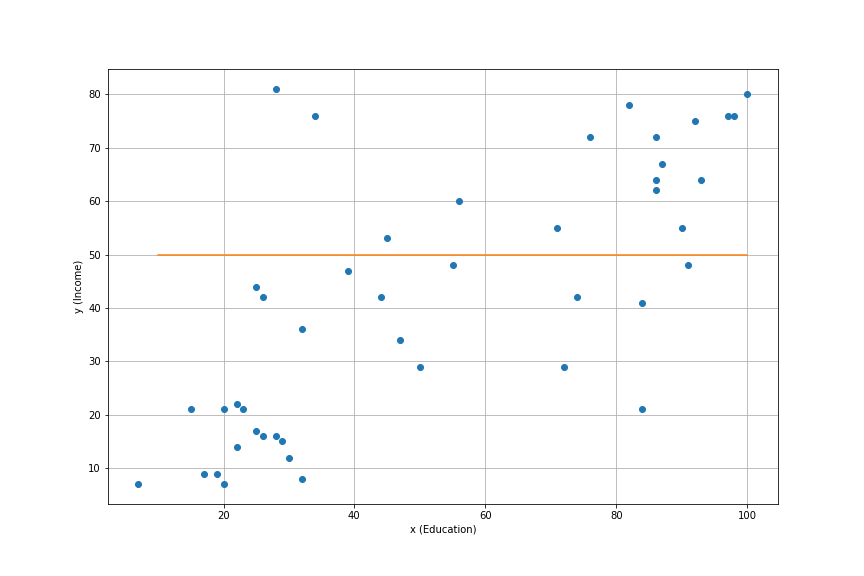

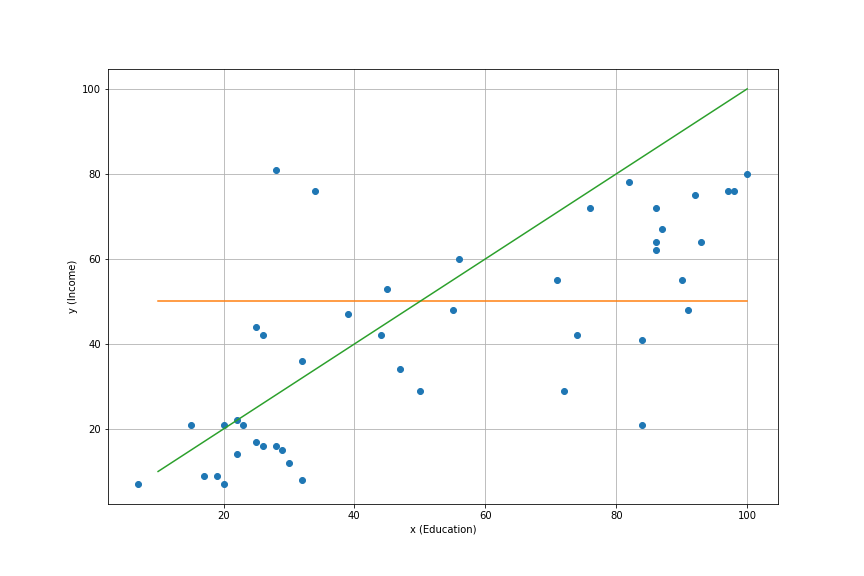

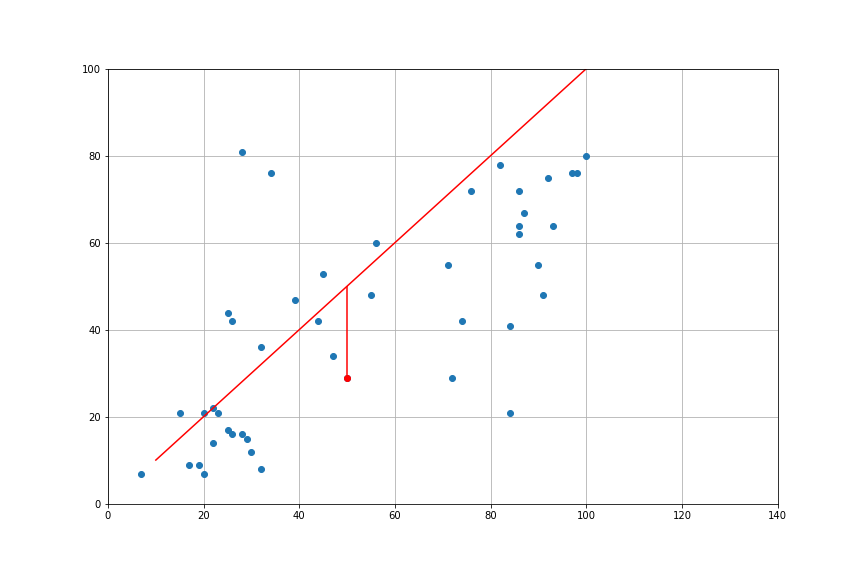

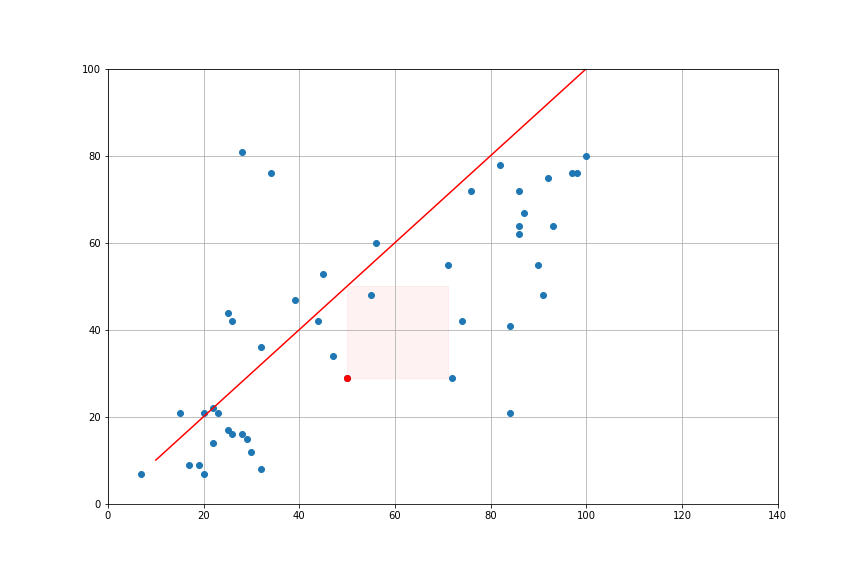

```python
# Show the first five rows
df.head()
```

| rownames | type | income | education | prestige |
|---|---|---|---|---|
| accountant | prof | 62 | 86 | 82 |
| pilot | prof | 72 | 76 | 83 |
| architect | prof | 75 | 92 | 90 |
| author | prof | 55 | 90 | 76 |
| chemist | prof | 64 | 86 | 90 |
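In this output the occupation names are the row index (named `rownames`), `type` is a text label, and `income`, `education` and `prestige` are numeric columns. Below is a minimal offline sketch that assumes only pandas is installed. The `head` DataFrame is a hypothetical, hand-copied stand-in for the five rows printed by `df.head()`, not the full dataset, so any statistics it prints describe these rows only.

```python
import pandas as pd

# Hypothetical offline stand-in: the five rows shown by df.head(), copied by hand
# so the DataFrame's structure can be explored without downloading the data.
head = pd.DataFrame(
    {
        "type": ["prof", "prof", "prof", "prof", "prof"],
        "income": [62, 72, 75, 55, 64],
        "education": [86, 76, 92, 90, 86],
        "prestige": [82, 83, 90, 76, 90],
    },
    index=pd.Index(
        ["accountant", "pilot", "architect", "author", "chemist"], name="rownames"
    ),
)

# Occupations are row labels, so a single occupation is selected with .loc.
print(head.loc["pilot"])

# Column means over these five rows only (not the whole Duncan dataset).
print(head[["income", "education", "prestige"]].mean())
```

On the real `df`, the same `.loc` and column-selection calls work unchanged, because its index is also the occupation name.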