graph LR

U[Unobserved Confounder] -->|?| X[Treatment]

U -->|?| Y[Outcome]

X -->|beta| Y

style U fill:#f9f,stroke:#333,stroke-width:2px,stroke-dasharray: 5 5

Introduction to Instrumental Variables

Data-Based Economics, ESCP, 2025-2026

2026-01-28

Categorical Variables

Data

Our multilinear regression: \[y = \alpha + \beta_1 x_1 + \cdots + \beta_n x_n\]

So far, we have only considered real-valued variables (\(x_i \in \mathbb{R}\)).

For example: \[y_{\text{gdp}} = \alpha + \beta_1 x_{\text{unemployment}} + \beta_2 x_{\text{inflation}}\]

Data

How do we deal with the following cases?

- binary variable: \(x\in \{0,1\}\) (or \(\{True, False\}\))

- ex: \(\text{gonetowar}\), \(\text{hasdegree}\)

- categorical variable:

- ex: survey result (0: I don’t know, 1: I strongly disagree, 2: I disagree, 3: I agree, 4: I strongly agree)

- there is no natural ranking of the answers

- when there is a ranking: hierarchical index

- nonnumerical variables:

- ex: flower type: \(x\in \{\text{myosotis}, \text{rose}, \dots\}\)

Binary variable

- Nothing to be done: just make sure variables take values 0 or 1. \[y_\text{salary} = \alpha + \beta x_{\text{gonetowar}}\]

- Interpretation:

- having gone to war is associated with a change of \(\beta\) in salary (an increase if \(\beta > 0\), a decrease otherwise); this is still not causality
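To see what \(\beta\) means with a binary regressor, here is a minimal sketch on simulated data (the variable names and all numbers are made up for illustration, not taken from any study). With a single 0/1 regressor, the OLS slope is exactly the difference between the mean salaries of the two groups, and the intercept is the mean salary of the group coded 0:

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1_000
# Hypothetical simulated data (numbers are illustrative, not from any study)
gonetowar = rng.integers(0, 2, size=n)               # binary regressor: 0 or 1
salary = 30_000 - 2_000 * gonetowar + rng.normal(0, 5_000, size=n)

# OLS of salary on a constant and the dummy
X = np.column_stack([np.ones(n), gonetowar])
alpha_hat, beta_hat = np.linalg.lstsq(X, salary, rcond=None)[0]

# beta_hat is the difference in mean salary between the two groups,
# alpha_hat is the mean salary of the group with gonetowar == 0
diff_means = salary[gonetowar == 1].mean() - salary[gonetowar == 0].mean()
print(f"alpha_hat = {alpha_hat:.1f}, beta_hat = {beta_hat:.1f}, difference in means = {diff_means:.1f}")
```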

Categorical variable

Look at the model: \[y_{\text{CO2 emission}} = \alpha + \beta x_{\text{banishment support}} \]

where \(y_{\text{CO2 emission}}\) is an individual’s CO2 emissions and \(x_{\text{banishment support}}\) is the response to the question "Do you support the banishment of petrol cars?".

Response is coded up as:

- 0: Strongly disagree

- 1: Disagree

- 2: Neutral

- 3: Agree

- 4: Strongly agree

If the variable were used directly, how would you interpret the coefficient \(\beta\)?

- index is hierarchical

- but the distances between 1 and 2 or 2 and 3 are not comparable…

Hierarchical index

We use one dummy variable per possible answer.

| \(D_{\text{Strongly Disagree}}\) | \(D_{\text{Disagree}}\) | \(D_{\text{Neutral}}\) | \(D_{\text{Agree}}\) | \(D_{\text{Strongly Agree}}\) |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 | 0 |
| 0 | 0 | 1 | 0 | 0 |
| 0 | 0 | 0 | 1 | 0 |
| 0 | 0 | 0 | 0 | 1 |

Hierarchical index

- Each answer is mapped to its own dummy variable: for each respondent, exactly one dummy equals 1.

- Note that the hierarchy is lost. The same treatment can be applied to non-hierarchical variables.

- Now our variables are perfectly collinear:

- we can deduce one from all the others (the dummies always sum to 1, like the constant)

- we drop one from the regression: the reference group (all dummies equal to 0)

- the choice of the reference group is not completely neutral

- for linear regressions, we can mostly ignore its implications: it changes how the coefficients are read, not the fitted values

- it must be frequent enough in the data
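A minimal sketch of the dummy coding and of the collinearity problem, using pandas and NumPy on a handful of hypothetical survey answers: with a constant and all five dummies, the design matrix loses one rank; dropping the reference group (here Neutral, an arbitrary choice) restores full rank.

```python
import numpy as np
import pandas as pd

labels = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]
# Hypothetical survey answers, coded 0..4 as on the previous slides
answers = np.array([0, 1, 2, 3, 4, 2, 3, 2])
cat = pd.Categorical.from_codes(answers, categories=labels)

# One dummy per possible answer
D = pd.get_dummies(cat, dtype=float)
D.columns = [str(c) for c in D.columns]
print(D)

# Every row contains exactly one 1, so the dummies add up to the constant column
X_full = np.column_stack([np.ones(len(D)), D.to_numpy()])
print("rank:", np.linalg.matrix_rank(X_full), "columns:", X_full.shape[1])  # 5 < 6

# Dropping the reference group (Neutral, an arbitrary choice) removes the collinearity
X = np.column_stack([np.ones(len(D)), D.drop(columns="Neutral").to_numpy()])
print("rank:", np.linalg.matrix_rank(X), "columns:", X.shape[1])  # 5 == 5
```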

Hierarchical index (2)

\[y_{\text{CO2 emission}} = \alpha + \beta_1 x_{\text{strdis}} + \beta_2 x_{\text{dis}} + \beta_3 x_{\text{agr}} + \beta_4 x_{\text{stragr}}\]

- Interpretation:

- being in the group which strongly agrees with banning petrol cars is associated with CO2 emissions that are \(\beta_4\) higher (lower if \(\beta_4 < 0\)) than those of the neutral group, the reference group

Nonnumerical variables

- What about nonnumerical variables?

- When variables take nonnumerical values, we first encode them as numerical codes.

- Example:

| activity | code |
|---|---|
| massage therapist | 1 |
| mortician | 2 |
| archeologist | 3 |
| financial clerks | 4 |

- Then we convert to dummy variables exactly like hierarchical indices

- here \(\text{massage therapist}\) is taken as reference (all dummies equal to 0)

| activity | \(D_{\text{mortician}}\) | \(D_{\text{archeologist}}\) | \(D_{\text{financial clerks}}\) |
|---|---|---|---|
| massage therapist | 0 | 0 | 0 |
| mortician | 1 | 0 | 0 |
| archeologist | 0 | 1 | 0 |
| financial clerks | 0 | 0 | 1 |
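The same two steps can be sketched with pandas (the list of respondents below is hypothetical): a Categorical with an explicit category order gives the integer codes, and `get_dummies` with `drop_first=True` drops the first category, here massage therapist, which becomes the reference.

```python
import pandas as pd

# Hypothetical respondents; the category order follows the coding table
activities = ["mortician", "massage therapist", "archeologist", "financial clerks", "mortician"]
order = ["massage therapist", "mortician", "archeologist", "financial clerks"]
cat = pd.Categorical(activities, categories=order)

codes = cat.codes + 1   # integer codes 1..4 (pandas codes start at 0)
# drop_first=True drops the first category (massage therapist): it becomes the reference
dummies = pd.get_dummies(cat, dtype=int, drop_first=True)
print(codes)
print(dummies)
```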

Hands-on

Use the formula API of statsmodels/linearmodels to create dummy variables automatically.

- Replace

- by:

There is an option to choose the reference group.
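As a sketch of the formula approach with statsmodels, assuming simulated data (the effects below are invented): wrapping a column in `C(...)` makes the formula create the dummies, and `Treatment(reference=...)` sets the reference group, here Neutral as in the CO2 regression. Each estimated coefficient is then a difference with the Neutral group.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

rng = np.random.default_rng(1)
labels = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]
# Hypothetical effects on CO2 emissions, relative to the Neutral group
effect = {"Strongly disagree": 2.0, "Disagree": 1.0, "Neutral": 0.0,
          "Agree": -1.0, "Strongly agree": -2.0}

n = 2_000
answer = rng.choice(labels, size=n)
co2 = 10 + np.array([effect[a] for a in answer]) + rng.normal(0, 1, size=n)
df = pd.DataFrame({"co2": co2, "answer": answer})

# C(...) creates one dummy per category; Treatment(reference=...) picks the omitted one
model = smf.ols('co2 ~ C(answer, Treatment(reference="Neutral"))', data=df).fit()
print(model.params)
```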

Causality and Endogeneity

What is causality?

Clear? Huh! Why a four-year-old child could understand this report! Run out and find me a four-year-old child, I can’t make head or tail of it.

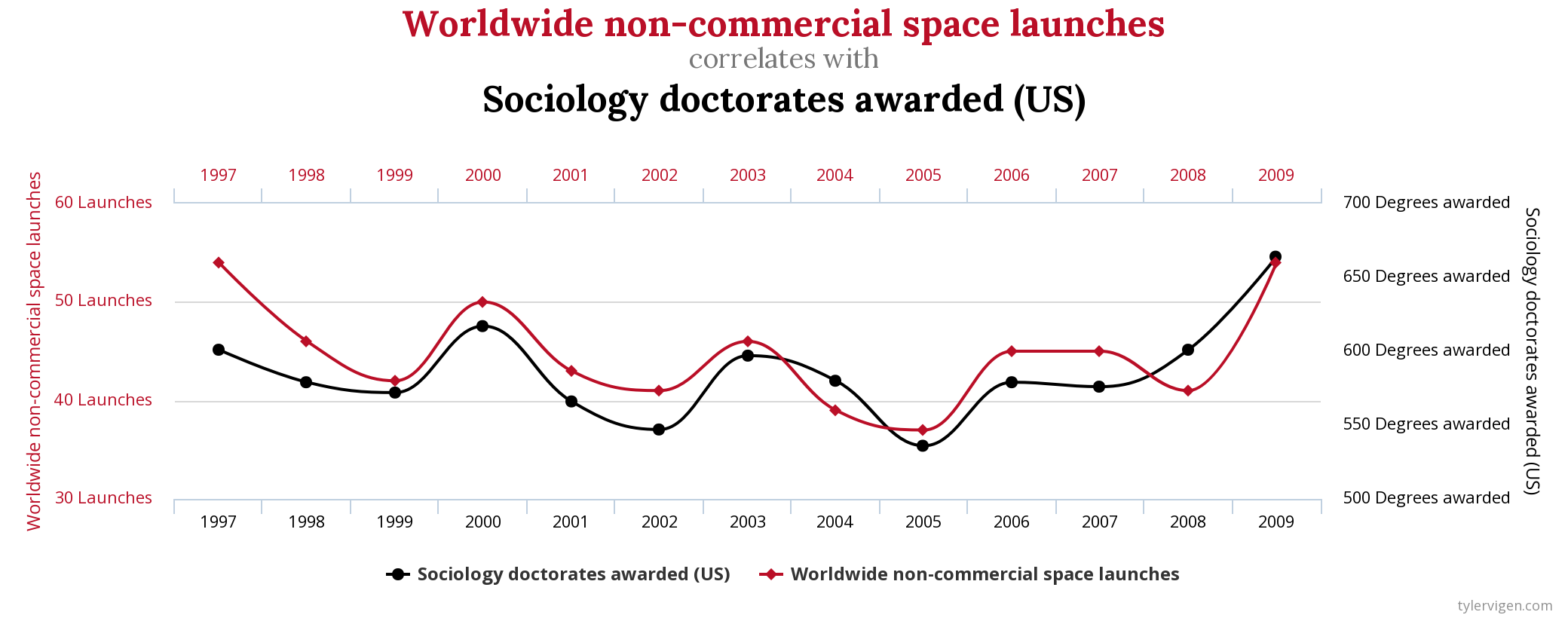

Spurious correlation

Spurious Correlation

- We have seen spurious correlation before

- it happens when two series comove (for instance because both trend over time) without any genuine relationship between them

- Also, two series might be correlated without one causing the other

- ex: countries eating more chocolate have more Nobel prizes…

Definitions?

But how do we define correlation and causality?

Both concepts are actually hard to define:

- in statistics (and econometrics) they refer to the data-generating process

- if the data was generated again, would you observe the same relations?

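For instance, the covariance between \(X\) and \(Y\) is the average, over many draws \(\omega\) of the data, of the product of their deviations from the mean: \[Cov(X, Y) = E_{\omega}\left[ (X-E[X])(Y-E[Y])\right]\] The correlation is this covariance divided by the standard deviations of \(X\) and \(Y\).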
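The expectation over draws \(\omega\) can be mimicked by simulation. A minimal sketch, assuming a made-up data-generating process \(Y = 2X + \text{noise}\) with standard normal \(X\) and noise: averaging the product of deviations over many draws gives the covariance (close to 2), and dividing by the standard deviations gives the correlation (close to \(2/\sqrt{5}\)).

```python
import numpy as np

rng = np.random.default_rng(2)
n_draws = 100_000
# Each draw omega regenerates (X, Y) from the same assumed process: Y = 2X + noise
X = rng.normal(0, 1, size=n_draws)
Y = 2 * X + rng.normal(0, 1, size=n_draws)

cov = np.mean((X - X.mean()) * (Y - Y.mean()))   # average over draws
corr = cov / (X.std() * Y.std())                 # normalize by standard deviations
print(cov, corr)   # close to the theoretical values 2 and 2/sqrt(5) ≈ 0.894
```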

How do we define causality (1)

- In math, we have implication: \(A \implies B\)

- applies to statements that can be either true or false

- given \(A\) and \(B\), \(A\) implies \(B\) unless \(A\) is true and \(B\) is false

- paradox of the drinker: at any time, in any nonempty pub, there exists a person such that: if this person drinks, then everybody drinks

- In a mathematical universe taking values \(\omega\), we can define causality between statements \(A(\omega)\) and \(B(\omega)\) as: \[\forall \omega, A(\omega) \implies B(\omega)\]
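For readers who like to check logic mechanically, a small sketch: material implication as a Python function, a brute-force check of the drinker paradox on every possible pub of 4 people, and the \(\forall \omega\) definition on a toy universe (the universe and the statements \(A\), \(B\) are hypothetical).

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: A => B is false only when A is true and B is false."""
    return (not a) or b

# Truth table of A => B
for a, b in product([True, False], repeat=2):
    print(f"A={a!s:5} B={b!s:5} A=>B: {implies(a, b)}")

# Drinker paradox, by brute force on every drinking pattern in a pub of 4 people:
# someone always satisfies "if I drink, everybody drinks"
paradox_holds = all(
    any(implies(d, all(drinking)) for d in drinking)
    for drinking in product([True, False], repeat=4)
)
print(paradox_holds)  # True

# Toy universe (hypothetical): omega is an integer, A = "divisible by 4", B = "even"
universe = range(100)
print(all(implies(w % 4 == 0, w % 2 == 0) for w in universe))  # True
```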

How do we define causality (2)

But causality in the real world is problematic

Usually, we observe \(A(\omega)\) only once…

Example:

- state of the world \(\omega\): 2008, big financial crisis, …

- A: Ben Bernanke chairman of the Fed

- B: successful economic interventions

- Was Ben Bernanke a good central banker?

- Impossible to say.

Causality in Statistics

Statistical definition of causality

Variable \(A\) causes \(B\) in a statistical sense if

- \(A\) and \(B\) are correlated

- \(A\) is known before \(B\)

- correlation between \(A\) and \(B\) is unaffected by other variables

- There are other related statistical definitions:

- like Granger causality…

- … but not for this course

The Problem: Endogeneity

The Ideal Regression \[ y = \alpha + \beta x + \epsilon \]

- We want \(\beta\) to be the causal effect of \(x\) on \(y\).

- Assumption: \(corr(x, \epsilon) = 0\) (Exogeneity)

The Reality: Endogeneity

- Often, \(x\) is correlated with the error term \(\epsilon\).

- Why?

- Omitted Variable Bias: A third variable \(U\) affects both \(x\) and \(y\).

- Reverse Causality: \(y\) causes \(x\).

- Measurement Error.

- Result: the OLS estimate of \(\beta\) is biased, and more data does not fix it. You cannot trust it.
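A minimal simulation of omitted variable bias, assuming a made-up process in which an unobserved \(U\) raises both \(x\) and \(y\) and the true effect is \(\beta = 1\): OLS of \(y\) on \(x\) alone converges to about 2, because \(x\) is correlated with the error term.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 100_000
beta = 1.0                                   # true causal effect (assumed)
U = rng.normal(size=n)                       # unobserved confounder
x = U + rng.normal(size=n)                   # U raises x ...
y = beta * x + 2 * U + rng.normal(size=n)    # ... and y: the error 2U + noise is correlated with x

X = np.column_stack([np.ones(n), x])
beta_ols = np.linalg.lstsq(X, y, rcond=None)[0][1]
print(beta_ols)   # about 2.0 (bias = Cov(x, 2U) / Var(x) = 2 / 2 = 1), not 1.0
```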

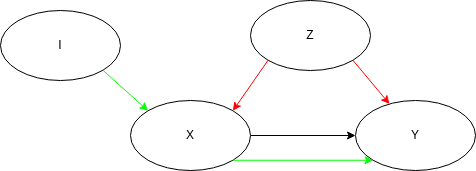

DAG Representation (Problem)

We can represent this problem using a Causal Graph (DAG):

- We want to measure the arrow \(X \to Y\).

- But the path \(X \leftarrow U \to Y\) creates a “backdoor” correlation.

- Since we don’t observe \(U\), we can’t control for it.

Experiments

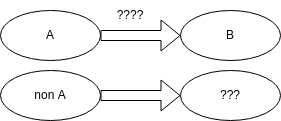

Factual and counterfactual

- Suppose we observe an event A

- A: a patient is administered a drug, government closes all schools during Covid

- We observe another event B

- B: the patient recovers, virus circulation decreases

- To interpret B as a consequence of A, we would like to consider the counter-factual:

- a patient is not administered a drug, government doesn’t close schools

- patient does not recover, virus circulation is stable

An important task in econometrics is to construct a counter-factual

- as the name suggests, it is never actually observed!

Scientific Experiment

- In science we establish causality by performing experiments

- and create the counterfactual

- A good experiment is reproducible

- same variables

- same state of the world (other variables)

- reproduce several times (in case output is noisy or random)

- Change one factor at a time

- to create a counter-factual

Measuring effect of treatment

- Assume we have discovered two medications: R and B

- Give one of them (R) to a patient and observe the outcome

- What would have been the effect of (B) on the same patient?

- ????

- What if we had many patients and let them choose the medication?

Maybe the effect would be the consequence of the choice of patients rather than of the medication?

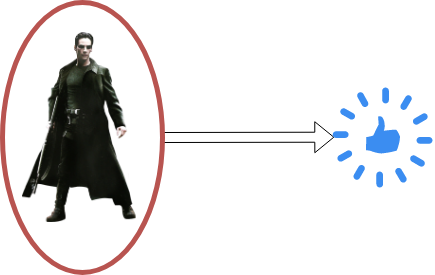

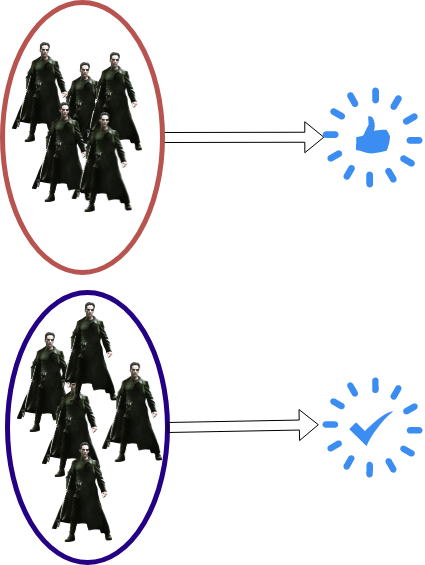

An example from behavioural economics

Example: cognitive dissonance

- Experiment in GATE Lab (ENS Lyon)

- Volunteers play an investment game.

- They are asked beforehand whether they support OM, PSG, or none.

- Experiment 1:

- Before the experiment, randomly selected volunteers are given a football shirt of their preferred team (treatment 1)

- Other volunteers receive nothing (treatment 0)

- Result:

- having a football shirt seems to boost investment performance…

Experiment 2: subjects are randomly given a shirt of either Olympique de Marseille (OM) or PSG.

Result:

- Having the good shirt improves performance.

- Having the wrong one deteriorates it badly.

- How would you code up this experiment?

- Can we conclude on some form of causality?
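One possible coding of Experiment 2, sketched with pandas on hypothetical subjects (not the lab's data): two dummies, good shirt and wrong shirt, with subjects who support no team as the reference group.

```python
import pandas as pd

# Hypothetical subjects: the team they support and the shirt they were randomly given
df = pd.DataFrame({
    "support": ["PSG", "OM", "none", "PSG", "OM"],
    "shirt":   ["PSG", "PSG", "OM", "OM", "OM"],
})

# good_shirt: the shirt matches the supported team
df["good_shirt"] = (df["shirt"] == df["support"]).astype(int)
# wrong_shirt: the subject supports a team but received the other team's shirt
df["wrong_shirt"] = ((df["support"] != "none") & (df["shirt"] != df["support"])).astype(int)
print(df)
# A regression such as  performance ~ good_shirt + wrong_shirt  would then compare
# both groups with the reference group (subjects supporting no team)
```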

Formalisation of the problem

Cause (A): two groups of people

- those given a shirt (treatment 1)

- those not given a shirt (treatment 0)

Possible consequence (B): performance

Take a given agent Alice: she performs well with a PSG shirt.

- maybe she is a good investor?

- or maybe she is playing for her team?

Let’s try to have her play again without the football shirt

- now the experiment has changed: she has gained experience, is more tired, misses the shirt…

- it is impossible to get a perfect counterfactual (i.e. where only A changes)

- Let’s take somebody else then? Bob was really bad without a PSG shirt.

- maybe he is a bad investor? or maybe he didn’t understand the rules?

- some other variables have changed, not only the treatment

- How to make a perfect experiment?

- Randomly choose whether to assign a shirt or not

- by construction the treatment will not be correlated with other variables
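The problem with self-selection, and the fix by randomization, can be simulated. A minimal sketch under made-up assumptions: an unobserved ability raises the outcome, the true treatment effect is 0.5, and in the first scenario abler subjects are more likely to be treated. Comparing group means then mixes the treatment effect with ability; with a coin-flip assignment, the comparison recovers the effect.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 100_000
effect = 0.5                                  # true effect of the treatment (assumed)
ability = rng.normal(size=n)                  # unobserved characteristic of each subject

def outcome(treated):
    # Outcome depends on the treatment and on unobserved ability
    return effect * treated + ability + rng.normal(size=n)

# 1) Self-selection: abler subjects are more likely to end up treated
chosen = (ability + rng.normal(size=n) > 0).astype(float)
y = outcome(chosen)
naive = y[chosen == 1].mean() - y[chosen == 0].mean()

# 2) Randomization: a coin flip decides, independently of ability
assigned = rng.integers(0, 2, size=n).astype(float)
y = outcome(assigned)
rct = y[assigned == 1].mean() - y[assigned == 0].mean()

print(f"self-selected: {naive:.2f}, randomized: {rct:.2f}, true: {effect}")
```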

Randomized Control Trial

Randomized Control Trial (RCT)

The best way to ensure that treatment is independent from other factors is to randomize it.

In medicine

- some patients receive the treatment (red pill)

- others receive the control treatment (blue pill / placebo)

In economics:

- randomized field experiments

- randomized phase-ins for new policies

- very useful for policy evaluation

Natural experiment

Natural Experiment

In a natural experiment, the treatment is assigned (as good as) randomly

- without any intervention by the econometrician

An example of a Natural Experiment:

- Gender Biases: Evidence from a Natural Experiment in French Local Elections

- (Eymeoud & Vertier, 2018)

Case Study: Eymeoud & Vertier (2018)

The Context

- 2015 French Departmental Elections.

- Major reform: strict parity.

- Candidates must run in Pairs (Binôme: 1 Man + 1 Woman).

- Voters vote for the whole pair (no panachage).

The Natural Experiment

- How to list the names? (M-F or F-M?)

- Rule: Determined by Alphabetical Order.

- This is “Random” (Exogenous)!

- Whether the Woman is 1st or 2nd is uncorrelated with her quality/ability.

- Hypothesis: Being listed first signals being the “Leader”.

- Result:

- Pairs with Woman first received 1.5% fewer votes from Right-wing voters.

- No significant effect for Left-wing voters.

- Conclusion: Evidence of discriminatory preferences among certain voters.

Instrumental variables

Example

Lifetime Earnings and the Vietnam Era Draft Lottery, by JD Angrist

Fact:

- Vietnam War veterans (1955-1975) earned, in the 1980s, an income 15% lower on average than those who didn’t go to war.

- What can we conclude?

- Hard to say: maybe those sent to war came back with lower productivity (because of PTSD, public stigma, …)? Or maybe they were not the most productive in the first place (unobserved selection bias)?

Problem (for the economist):

- we didn’t send people to war randomly

Genius idea:

- there is a variable which randomly affected whether people were sent: the Draft

- the US drafted (conscripted) soldiers between 1947 and 1973; from 1969 on, a lottery determined the order in which men were called

- the draft number was determined by date of birth (and first letters of the name)

- it was correlated with the probability that a given person would go to war

- and it was essentially random, or at least independent of anything relevant to the problem

Can we use the Draft to generate randomness?

Problem

- Take the linear regression: \[y = \alpha + \beta x + \epsilon\]

- \(y\): salary

- \(x\): went to war

- We want to establish causality from x to y

- we would like to interpret \(x\) as the “treatment”

- But there can be unobserved confounding factors:

- variable \(u\) which causes both \(x\) and \(y\)

- example: socio-economic background, IQ, …

- If we could identify and measure \(u\), we could control for it: \[y = \alpha + \beta_1 x + \beta_2 u + \epsilon\]

- we would remove the bias on \(\beta_1\) and get a better predictor of \(y\), but with more uncertainty about \(\beta_1\) (\(x\) and \(u\) are correlated)
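A sketch of what controlling for an observed confounder does, assuming simulated data in which the confounder (denoted \(u\) in the code) is observed and the true \(\beta_1 = 1\): the short regression is biased, the regression including \(u\) is not, and the price is a variance inflation factor \(1/(1-\rho^2)\), where \(\rho\) is the correlation between \(x\) and the confounder, which multiplies the variance of \(\hat{\beta}_1\).

```python
import numpy as np

rng = np.random.default_rng(5)
n = 100_000
u = rng.normal(size=n)                       # confounder, assumed observed here
x = u + rng.normal(size=n)
y = 1.0 * x + 2 * u + rng.normal(size=n)     # true beta_1 = 1

def ols(y, *regressors):
    """OLS coefficients of y on a constant and the given regressors."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    return np.linalg.lstsq(X, y, rcond=None)[0]

naive = ols(y, x)           # omits u: slope is biased
controlled = ols(y, x, u)   # controls for u: slope close to 1
vif = 1 / (1 - np.corrcoef(x, u)[0, 1] ** 2)   # variance inflation due to corr(x, u)
print(naive[1], controlled[1], vif)   # about 2.0, 1.0 and 2.0
```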

The IV Solution (DAG)

We introduce an Instrument (\(Z\)) to break the link.

graph LR

U[Unobserved Confounder] -.-> X[Treatment]

U -.-> Y[Outcome]

X -->|beta| Y

Z[Instrument] -->|Relevance| X

Z -.->|Exclusion| Y

Z x-.-x U

style U fill:#f9f,stroke:#333,stroke-width:2px,stroke-dasharray: 5 5

style Z fill:#9f9,stroke:#333,stroke-width:4px

linkStyle 4 stroke:red,stroke-width:2px;

linkStyle 5 stroke:red,stroke-width:2px;

The Logic:

- Verify \(Z\) causes variation in \(X\).

- Use only that variation in \(X\) (caused by \(Z\)) to predict \(Y\).

- Since \(Z\) is uncorrelated with \(U\), this “clean” variation is safe to use.

Conditions for a Valid Instrument

To be valid, an instrument \(Z\) must satisfy two key conditions:

- Relevance: \(Cov(Z, X) \neq 0\)

- The instrument must actually affect the treatment.

- Testable: Regress \(X\) on \(Z\) and check significance (rule of thumb: first-stage F-statistic > 10).

- Exclusion Restriction: \(Cov(Z, \epsilon) = 0\)

- The instrument affects \(Y\) ONLY through \(X\).

- It must NOT be correlated with the omitted variable \(U\).

- NOT Testable: Must be argued from theory/institution.
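Relevance can be checked with the first stage. A minimal sketch with NumPy, assuming a simulated binary instrument and treatment (the coefficients are made up): with a single instrument, the conventional (homoskedastic) first-stage F-statistic equals the squared t-statistic of the slope, to be compared with the rule-of-thumb threshold of 10.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 1_000
z = rng.integers(0, 2, size=n).astype(float)    # hypothetical binary instrument
u = rng.normal(size=n)                          # unobserved confounder
x = (0.8 * z + 0.5 * u + rng.normal(size=n) > 0.5).astype(float)  # binary treatment

# First stage: OLS of x on a constant and z
Z = np.column_stack([np.ones(n), z])
coef = np.linalg.lstsq(Z, x, rcond=None)[0]
resid = x - Z @ coef
sigma2 = resid @ resid / (n - Z.shape[1])              # residual variance
se = np.sqrt(sigma2 * np.linalg.inv(Z.T @ Z)[1, 1])    # conventional standard error of the slope
F = (coef[1] / se) ** 2                                # one instrument: F equals t squared
print(f"first-stage slope = {coef[1]:.3f}, F = {F:.1f}")
```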

Two Stage Least Squares (2SLS)

How do we estimate this? In two stages:

Stage 1: Predict the Treatment. Isolate the part of \(X\) driven by \(Z\) by regressing \(x\) on \(z\): \[ \hat{x} = \alpha_0 + \beta_0 z \]

Stage 2: Estimate the Effect. Use the predicted treatment \(\hat{x}\) (which is “clean”) to explain \(Y\): \[ y = \alpha + \beta_{IV} \hat{x} + \epsilon \]

- Result: \(\beta_{IV}\) is a consistent estimator of the causal effect.

- Intuition: \(\beta_{IV} \approx \frac{\text{Effect of Z on Y}}{\text{Effect of Z on X}}\) (Wald Estimator)
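The two stages can be written out by hand. A minimal sketch with NumPy on simulated data (the true effect of -0.15 and all other coefficients are assumptions): OLS is biased by the confounder, while 2SLS recovers the effect; with a single binary instrument, 2SLS coincides exactly with the Wald ratio. Note that standard errors from a hand-made second stage are not valid, since they ignore that \(\hat{x}\) was estimated; a dedicated IV routine should be used for inference.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200_000
beta = -0.15                                   # true effect of x on y (assumed)
z = rng.integers(0, 2, size=n).astype(float)   # instrument, independent of u
u = rng.normal(size=n)                         # unobserved confounder
x = (0.8 * z - 0.7 * u + rng.normal(size=n) > 0.5).astype(float)  # treatment depends on z and u
y = beta * x + 0.3 * u + rng.normal(0, 0.5, size=n)                # outcome depends on x and u

def ols(y, X):
    return np.linalg.lstsq(X, y, rcond=None)[0]

const = np.ones(n)
beta_ols = ols(y, np.column_stack([const, x]))[1]          # biased by u

# Stage 1: fitted values of x from a regression on z
g = ols(x, np.column_stack([const, z]))
x_hat = g[0] + g[1] * z
# Stage 2: regress y on the fitted values
beta_2sls = ols(y, np.column_stack([const, x_hat]))[1]

# Wald estimator: effect of z on y divided by effect of z on x
wald = (y[z == 1].mean() - y[z == 0].mean()) / (x[z == 1].mean() - x[z == 0].mean())
print(beta_ols, beta_2sls, wald)
```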

Choosing a good instrument

Choosing an instrumental variable

A good instrument, when trying to explain y by x, is a variable that is correlated with the treatment (x) but does not have any effect on the outcome of interest (y), apart from its effect through x.

In particular, it should be uncorrelated with any potential confounding factor (whether observed or unobserved).

In practice

- Both `statsmodels` and `linearmodels` support instrumental variables (look for `IV2SLS`)

- The `linearmodels` library has a handy formula syntax: `salary ~ 1 + [war ~ draft]`

- its API is similar but not exactly identical to statsmodels

- Example from the doc

Conclusion

Summary

- Causality \(\neq\) Correlation: We need to worry about endogeneity (confounders, reverse causality).

- Gold Standard: Randomized Control Trials (RCT) solve this by forcing \(X\) to be random.

- Instrumental Variables (IV):

- We look for a “natural experiment” (\(Z\)) that mimics an RCT.

- \(Z\) must be Relevant (\(Z \to X\)) and Exogenous (\(Z \perp \epsilon\)).

- Implementation: Use Two-Stage Least Squares (2SLS), supported by libraries like linearmodels

Warning

Finding a valid instrument is hard. A bad instrument (weak or endogenous) can be worse than OLS!